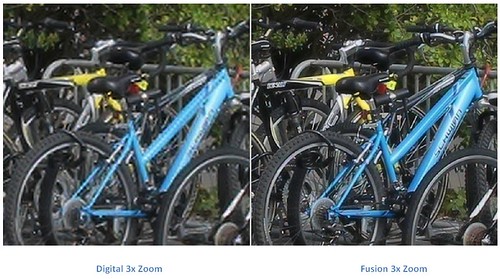

Qualcomm has an interesting demo at MWC that uses its Snapdragon 800 processor, coupled with two lenses, to take two photographs simultaneously and merge the exposures into a single image.

Qualcomm says the setup significantly improves zoom, allowing users to zoom 3x optically while shooting 13 MP stills and 5x while recording full HD video.
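Qualcomm has not said how the Snapdragon merges the two exposures. As a rough illustration of the idea, the sketch below blends two frames pixel by pixel and gives more weight to whichever frame is better exposed at that pixel. It is a simplified, single-scale version of the "well-exposedness" weighting used in exposure fusion, not Qualcomm's pipeline. It assumes the two frames are already aligned grayscale images with values in [0, 1]; a real dual-lens camera would first have to correct for parallax between the lenses. The function names and the `sigma=0.2` default are illustrative choices.

```python
import math


def well_exposedness(p, sigma=0.2):
    """Gaussian weight that favours mid-grey pixels (p is in [0, 1]).

    Pixels near 0.5 get a weight close to 1; crushed shadows and blown
    highlights get a weight close to 0 (but never exactly 0)."""
    return math.exp(-((p - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(short_exp, long_exp):
    """Blend two aligned exposures of equal size into one image.

    Each input is a list of rows of floats in [0, 1]. Every output pixel
    is a weighted average of the two input pixels, weighted by how well
    exposed each one is."""
    fused = []
    for row_s, row_l in zip(short_exp, long_exp):
        out_row = []
        for ps, pl in zip(row_s, row_l):
            ws, wl = well_exposedness(ps), well_exposedness(pl)
            # The weights are always positive, so the sum is never zero.
            out_row.append((ws * ps + wl * pl) / (ws + wl))
        fused.append(out_row)
    return fused


# Example: in the first pixel the long exposure is blown out (1.0), so the
# well-exposed short-exposure value dominates the result.
short_frame = [[0.5, 0.1]]
long_frame = [[1.0, 0.45]]
result = fuse_exposures(short_frame, long_frame)
```

Production exposure fusion usually also weights by contrast and saturation and blends across image pyramids to avoid seams. The per-pixel version keeps only the core idea.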

Core Photonics has developed a family of camera reference designs with single, dual and quad apertures for computational photography. The designs enable features such as true optical zoom, low-profile cameras, high dynamic range, refocusing and gesture control.

Dual fixed lenses coupled with "computational photography" are expected to reach smartphones soon. The concept has already been adopted by police states.

The Gorgon Stare wide-area surveillance system is being developed and tested on the MQ-9 Reaper drone at Eglin Air Force Base, FL.

According to Wired Magazine, the Autonomous Real-time Ground Ubiquitous Surveillance – Imaging System (ARGUS-IS) uses 92 cameras at once, compared to Gorgon Stare's measly dozen. Its 1.8-gigapixel video sensor is made up of 368 five-megapixel video chips mounted in four separate cameras. It requires a 10 TeraOPS processor to crunch the 27 gigapixels per second the sensor produces at a frame rate of 15 Hz.
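The ARGUS-IS figures are internally consistent, and a few lines of arithmetic show how they fit together. The sketch below uses only the numbers quoted above (368 chips, 5 megapixels each, 15 Hz, 10 TeraOPS). The per-pixel operation budget at the end is derived from those numbers as a rough estimate, not a published specification.

```python
# Figures quoted for ARGUS-IS.
CHIPS = 368
MEGAPIXELS_PER_CHIP = 5
FRAME_RATE_HZ = 15
PROCESSOR_OPS_PER_S = 10e12  # 10 TeraOPS = 10 * 10**12 operations per second

# Total sensor resolution: 368 * 5 MP = 1,840 MP, i.e. about 1.8 gigapixels.
gigapixels_per_frame = CHIPS * MEGAPIXELS_PER_CHIP / 1000

# Pixel throughput: 1.84 GP per frame * 15 frames per second = 27.6 GP/s.
gigapixels_per_second = gigapixels_per_frame * FRAME_RATE_HZ

# Rough compute budget: operations available for each pixel that arrives.
ops_per_pixel = PROCESSOR_OPS_PER_S / (gigapixels_per_second * 1e9)

print(f"{gigapixels_per_frame:.2f} gigapixels per frame")   # 1.84 gigapixels per frame
print(f"{gigapixels_per_second:.1f} gigapixels per second")  # 27.6 gigapixels per second
print(f"about {ops_per_pixel:.0f} operations per pixel")
```

That works out to roughly 360 operations per pixel, a modest budget for tasks such as stitching, compression and tracking in real time. This suggests why a processor in the TeraOPS class is needed.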

Posted on Tue, 25 Feb 2014 19:21:56 +0000 at http://www.dailywireless.org/2014/02/25/...al-at-mwc/
